What is oterm?

oterm is a text-based terminal client for Ollama. It lets users chat with and configure the models served by Ollama directly from their terminal.

How to use oterm?

To use oterm, ensure that the Ollama server is running, then simply type oterm in your terminal. You can customize the connection settings using environment variables if needed.
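
Before launching oterm it can help to confirm that the Ollama server is actually reachable. The following is a minimal sketch, not part of oterm itself. It assumes the server listens on Ollama's default address (`http://127.0.0.1:11434`) and that the connection is overridden through environment variables named `OLLAMA_URL` or `OLLAMA_HOST`. Those variable names are an assumption here, so check the oterm documentation for the ones it actually reads. The helper names `ollama_base_url` and `ollama_is_running` are hypothetical.

```python
import http.client
import os
import urllib.request

DEFAULT_URL = "http://127.0.0.1:11434"  # Ollama's default listen address


def ollama_base_url(env=os.environ):
    """Pick the server URL from the environment, else use the default.

    OLLAMA_URL and OLLAMA_HOST are assumed variable names (see lead-in).
    """
    if env.get("OLLAMA_URL"):
        return env["OLLAMA_URL"].rstrip("/")
    host = env.get("OLLAMA_HOST")
    if host:
        # Accept both "host:port" and a full "http://host:port" value.
        return (host if "://" in host else f"http://{host}").rstrip("/")
    return DEFAULT_URL


def ollama_is_running(base_url, timeout=2.0):
    """Return True if an HTTP server answers at base_url with status 200."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as resp:
            return resp.status == 200
    except (OSError, ValueError, http.client.HTTPException):
        # Connection refused, timeout, malformed URL, or a broken response.
        return False
```

Calling `ollama_is_running(ollama_base_url())` gives a quick yes/no answer. If it returns `False`, start the Ollama server (or fix the connection settings) before typing oterm.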

Key features of oterm

- Intuitive terminal UI with no need for additional servers or frontends.

- Supports multiple persistent chat sessions with customizable system prompts and parameters.

- Integration with various tools for enhanced functionality, such as fetching URLs and accessing current weather.

- Ability to customize models and their parameters easily.
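
To make the tool integration above more concrete, here is a rough sketch of how a tool can be described to a model and how a resulting tool call can be dispatched. It is not oterm's implementation. The tool description follows the JSON "function" shape that Ollama's chat API accepts for tools. The tool name `get_weather`, the stub body, and the `run_tool_call` helper are all hypothetical.

```python
# Tool description in the JSON "function" shape accepted by Ollama's chat API.
WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",  # hypothetical tool name
        "description": "Return the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {
                "city": {"type": "string", "description": "City name"},
            },
            "required": ["city"],
        },
    },
}


def get_weather(city):
    """Placeholder only; a real tool would query a weather service here."""
    return f"(stub) no live data for {city}"


# Maps tool names the model may request to the Python callables behind them.
TOOLS = {"get_weather": get_weather}


def run_tool_call(call):
    """Dispatch one tool call shaped like {"function": {"name", "arguments"}}."""
    fn = call["function"]
    handler = TOOLS.get(fn["name"])
    if handler is None:
        return f"unknown tool: {fn['name']}"
    return handler(**fn.get("arguments", {}))
```

The string returned by `run_tool_call` would be sent back to the model as the tool's result, which is how external information reaches the conversation.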

Use cases of oterm

- Running and managing machine learning models from the terminal.

- Creating and editing chat sessions for interactive model inference.

- Utilizing external tools to enhance model capabilities and access real-time data.

FAQ about oterm

- Is oterm free to use?

Yes. oterm is open source, released under the MIT License, and free to use.

- What do I need to run oterm?

You need to have the Ollama server installed and running.

- Can I customize the models used in oterm?

Yes! You can select and customize models, including their system prompts and parameters.

oterm

the text-based terminal client for Ollama.

Features

- intuitive and simple terminal UI, no need to run servers, frontends, just type oterm in your terminal.

- supports Linux, macOS, and Windows and most terminal emulators.

- multiple persistent chat sessions, stored together with system prompt & parameter customizations in sqlite.

- support for Model Context Protocol (MCP) tools & prompts integration.

- can use any of the models you have pulled in Ollama, or your own custom models.

- allows for easy customization of the model's system prompt and parameters.

- supports tools integration for providing external information to the model.
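
As an illustration of the persistent-sessions feature, the sketch below stores a chat session together with its system prompt and parameters in sqlite, using Python's standard `sqlite3` module. The table layout, column names, and helper functions are hypothetical and are not oterm's actual schema. The model name `llama3.2` is only an example value.

```python
import json
import sqlite3


def open_store(path=":memory:"):
    """Open (or create) the database and make sure the chat table exists."""
    conn = sqlite3.connect(path)
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS chat (
            id INTEGER PRIMARY KEY AUTOINCREMENT,
            name TEXT NOT NULL,
            model TEXT NOT NULL,
            system TEXT,
            parameters TEXT NOT NULL DEFAULT '{}'
        )
        """
    )
    return conn


def save_chat(conn, name, model, system=None, parameters=None):
    """Insert one chat session and return its row id."""
    cur = conn.execute(
        "INSERT INTO chat (name, model, system, parameters) VALUES (?, ?, ?, ?)",
        (name, model, system, json.dumps(parameters or {})),
    )
    conn.commit()
    return cur.lastrowid


def load_chat(conn, chat_id):
    """Return the stored session as a dict, or None if the id is unknown."""
    row = conn.execute(
        "SELECT name, model, system, parameters FROM chat WHERE id = ?",
        (chat_id,),
    ).fetchone()
    if row is None:
        return None
    name, model, system, parameters = row
    return {
        "name": name,
        "model": model,
        "system": system,
        "parameters": json.loads(parameters),  # stored as JSON text
    }


conn = open_store()
chat_id = save_chat(conn, "notes", "llama3.2", "You are terse.", {"temperature": 0.2})
restored = load_chat(conn, chat_id)
```

Keeping the parameters as a JSON text column is one simple design choice: new model options can be added without changing the table. Passing a file path instead of `":memory:"` makes the sessions survive restarts.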

Quick install

uvx oterm

See Installation for more details.

Documentation

What's new

- MCP Sampling is here!

- In-app log viewer for debugging and troubleshooting.

- Support for sixel graphics for displaying images in the terminal.

- Support for Model Context Protocol (MCP) tools & prompts!

- Create custom commands that can be run from the terminal using oterm. Each of these commands is a chat, customized to your liking and connected to the tools of your choice.

Screenshots

The splash screen animation that greets users when they start oterm.

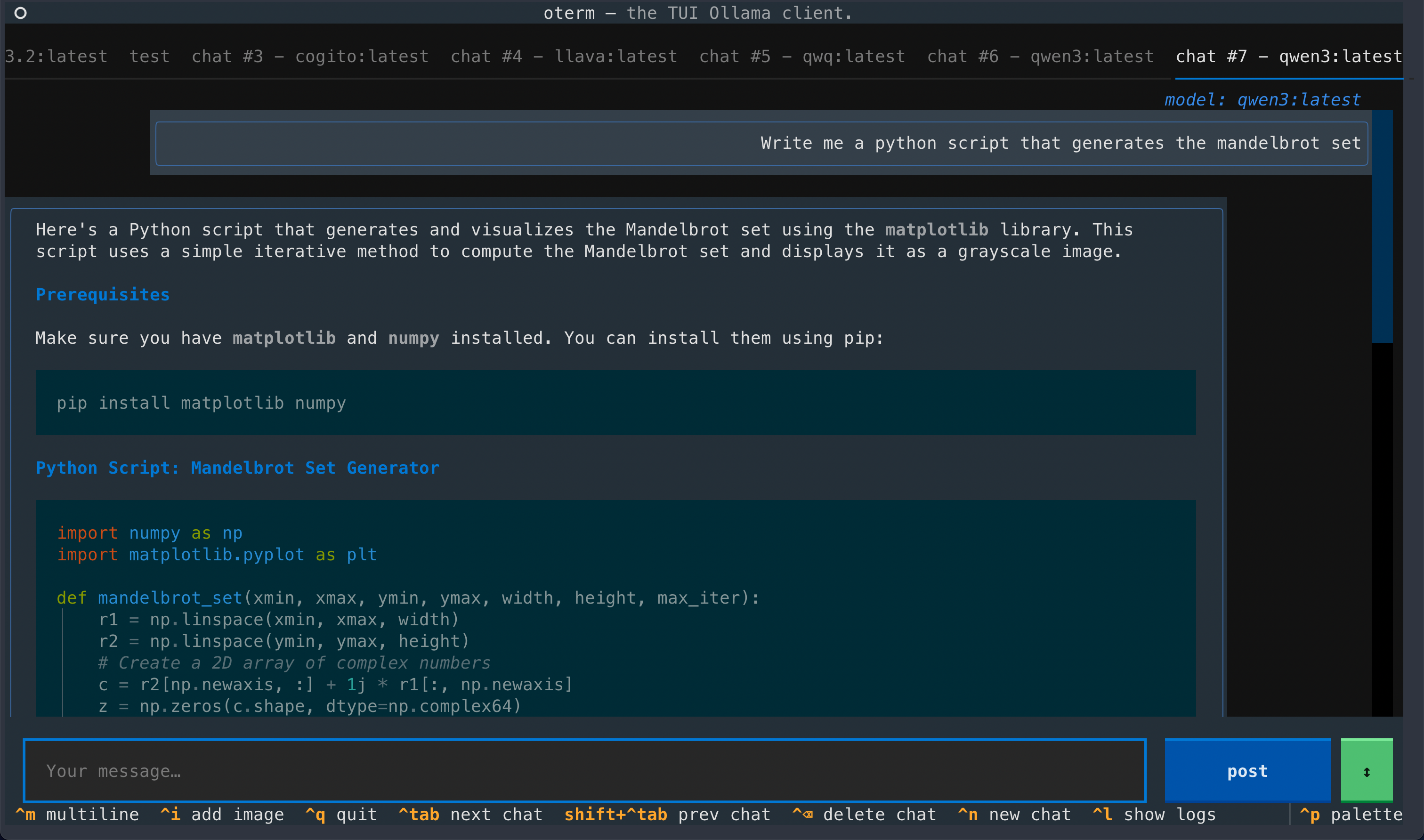

A view of the chat interface, showcasing the conversation between the user and the model.

oterm using the git MCP server to access its own repo.

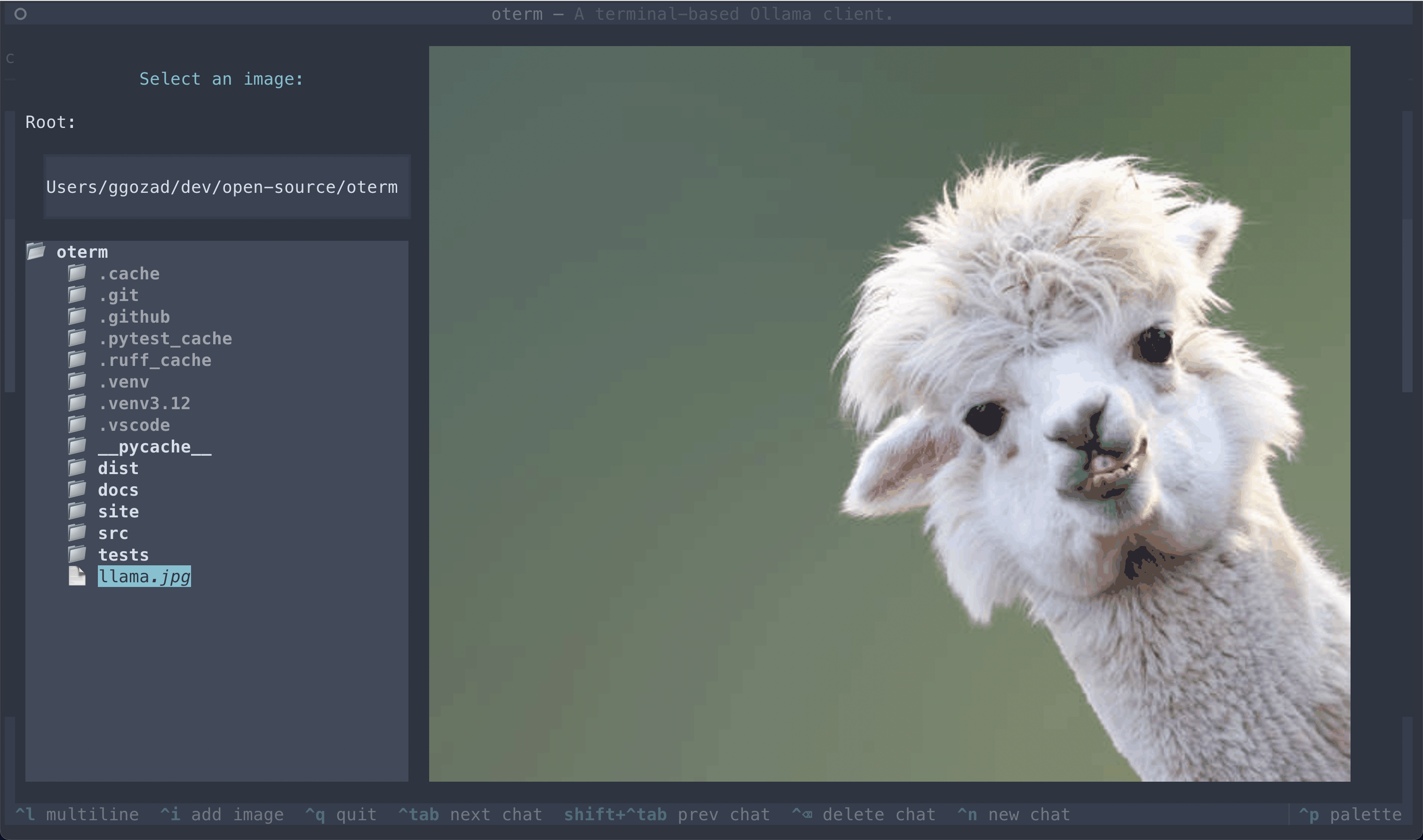

The image selection interface, demonstrating how users can include images in their conversations.

License

This project is licensed under the MIT License.