MaxKB

💬 MaxKB is an open-source AI assistant for enterprise. It seamlessly integrates RAG pipelines, supports robust workflows, and provides MCP tool-use capabilities.

What is MaxKB?

MaxKB is a ready-to-use RAG (Retrieval-Augmented Generation) chatbot that features robust workflow and MCP tool-use capabilities, designed to enhance intelligent customer service, corporate knowledge bases, academic research, and education.

How do I use MaxKB?

To use MaxKB, run the Docker command in the Quick start section to start a MaxKB container, then open the web interface at http://your_server_ip:8080 and log in with the default admin credentials: username admin, password MaxKB@123.. (the two trailing periods are part of the password).

What are the key features of MaxKB?

- Ready-to-Use: Supports direct document uploads and automatic crawling of online documents, with automatic text splitting and vectorization.

- Flexible Orchestration: Equipped with a powerful workflow engine for complex business scenarios.

- Seamless Integration: Zero-coding integration into third-party systems for enhanced Q&A capabilities.

- Model-Agnostic: Supports various large models including private and public options.

- Multimodal: Supports text, image, audio, and video as both input and output.
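The "Ready-to-Use" item relies on two preprocessing steps: text splitting and vectorization. The following is a minimal sketch of those steps in general, not MaxKB's implementation. It assumes fixed-size character chunks with overlap and uses a bag-of-words count as a stand-in for the dense vectors an embedding model would produce. `split_text` and `vectorize` are hypothetical names.

```python
from collections import Counter
import re


def split_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into fixed-size character chunks that overlap.

    Hypothetical helper for illustration only; not MaxKB's splitter.
    """
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("require chunk_size > 0 and 0 <= overlap < chunk_size")
    step = chunk_size - overlap  # each new chunk starts `step` characters later
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once this chunk already reaches the end of the text.
        if start + chunk_size >= len(text):
            break
    return chunks


def vectorize(chunk: str) -> Counter:
    """Map a chunk to a sparse bag-of-words vector (word -> count).

    Hypothetical stand-in for an embedding model, which would return dense floats.
    """
    return Counter(re.findall(r"\w+", chunk.lower()))


if __name__ == "__main__":
    doc = "MaxKB splits uploaded documents into chunks. " * 3
    for piece in split_text(doc, chunk_size=60, overlap=15):
        print(len(piece), vectorize(piece).most_common(2))
```

Overlap keeps text that straddles a chunk boundary visible in both neighboring chunks, at the cost of storing some text twice.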

What are the use cases of MaxKB?

- Intelligent customer service automation.

- Corporate internal knowledge management.

- Academic research assistance.

- Educational tools for learning.

FAQ about MaxKB

- What models does MaxKB support?

MaxKB supports a variety of large models including DeepSeek, Llama, Qwen, OpenAI, and more.

- Is MaxKB easy to integrate?

Yes! MaxKB allows for zero-coding integration into existing systems.

- Can I deploy MaxKB on-premise?

Yes, MaxKB supports on-premise deployment.
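The first FAQ answer lists several model families. Supporting many models usually comes down to routing every call through one shared interface. The sketch below illustrates that general pattern with a hypothetical `ChatModel` protocol and two stub adapters that make no network calls. None of these names come from MaxKB.

```python
from typing import Callable, Protocol


class ChatModel(Protocol):
    """Hypothetical common interface that every model adapter implements."""

    def generate(self, prompt: str) -> str: ...


class EchoLocalModel:
    """Hypothetical stub for a privately hosted model (e.g. a self-hosted Llama or Qwen)."""

    def generate(self, prompt: str) -> str:
        return f"[local] {prompt}"


class EchoHostedModel:
    """Hypothetical stub for a public API model; a real adapter would call the provider's SDK."""

    def generate(self, prompt: str) -> str:
        return f"[hosted] {prompt}"


# Hypothetical registry mapping a configured provider name to an adapter factory.
REGISTRY: dict[str, Callable[[], ChatModel]] = {
    "local": EchoLocalModel,
    "hosted": EchoHostedModel,
}


def answer(provider: str, question: str) -> str:
    """Send a question to whichever model is configured; callers never change."""
    if provider not in REGISTRY:
        raise ValueError(f"unknown provider: {provider}")
    model = REGISTRY[provider]()
    return model.generate(question)
```

Adding another model then means registering one more adapter, while calling code such as `answer` stays unchanged.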

An Open-Source AI Assistant for Enterprise

MaxKB (Max Knowledge Brain) is a powerful and easy-to-use AI assistant that integrates Retrieval-Augmented Generation (RAG) pipelines, supports robust workflows, and provides advanced MCP tool-use capabilities. MaxKB is widely used for intelligent customer service, corporate internal knowledge bases, academic research, and education.

- RAG Pipeline: Supports direct document uploads and automatic crawling of online documents, with automatic text splitting and vectorization. Grounding answers in retrieved content helps reduce hallucinations in large models and delivers a better smart Q&A experience.

- Flexible Orchestration: Equipped with a powerful workflow engine, function library and MCP tool-use, enabling the orchestration of AI processes to meet the needs of complex business scenarios.

- Seamless Integration: Facilitates zero-coding rapid integration into third-party business systems, quickly equipping existing systems with intelligent Q&A capabilities to enhance user satisfaction.

- Model-Agnostic: Supports various large models, including private models (such as DeepSeek, Llama, and Qwen) and public models (such as OpenAI, Claude, and Gemini).

- Multimodal: Native support for text, image, audio, and video as both input and output.
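The Flexible Orchestration item describes chaining steps such as knowledge retrieval, tool calls, and model calls. The sketch below shows only the general pattern of running named nodes in order over a shared context. `Node`, `run_workflow`, and the three example nodes are hypothetical and do not reflect MaxKB's workflow format or API.

```python
from typing import Callable

# Hypothetical node type: reads the shared context, returns fields to add or overwrite.
Node = Callable[[dict], dict]


def run_workflow(nodes: list[tuple[str, Node]], context: dict) -> dict:
    """Execute nodes in order; each node sees the outputs of earlier nodes."""
    context = dict(context)  # copy so the caller's dict is not mutated
    trace: list[str] = []
    for name, node in nodes:
        context.update(node(context))
        trace.append(name)  # record execution order for inspection
    context["trace"] = trace
    return context


def retrieve(ctx: dict) -> dict:
    # Stand-in for a knowledge-base lookup: naive substring match.
    return {"passages": [p for p in ctx["kb"] if ctx["question"].lower() in p.lower()]}


def call_tool(ctx: dict) -> dict:
    # Stand-in for a tool call (e.g. an MCP tool); here it just counts passages.
    return {"passage_count": len(ctx["passages"])}


def compose_answer(ctx: dict) -> dict:
    # Stand-in for the LLM call that would write the final answer.
    return {"answer": f"Found {ctx['passage_count']} passage(s) about {ctx['question']}."}


if __name__ == "__main__":
    result = run_workflow(
        [("retrieve", retrieve), ("tool", call_tool), ("answer", compose_answer)],
        {"question": "pgvector", "kb": ["MaxKB stores vectors with pgvector.", "Vue.js frontend."]},
    )
    print(result["answer"])  # Found 1 passage(s) about pgvector.
```

Passing one context dictionary between nodes keeps each node independent, so a node can be swapped out or reordered without touching the others.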

Quick start

Run the following command to start a MaxKB container using Docker:

docker run -d --name=maxkb --restart=always -p 8080:8080 -v ~/.maxkb:/var/lib/postgresql/data -v ~/.python-packages:/opt/maxkb/app/sandbox/python-packages 1panel/maxkb

Access the MaxKB web interface at http://your_server_ip:8080 with the default admin credentials:

- username: admin

- password: MaxKB@123..
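If you script deployments, you can assemble the same `docker run` command from Python. This is a hedged sketch: the `maxkb_docker_cmd` helper and its `port` and `name` parameters are hypothetical conveniences. The flag comments describe standard Docker behavior, and the two volume paths are copied unchanged from the command above.

```python
import os
import shlex


def maxkb_docker_cmd(port: int = 8080, name: str = "maxkb") -> list[str]:
    """Build the Quick start `docker run` command as an argument list (hypothetical helper)."""
    # Python's subprocess does not expand "~", so resolve home-relative host paths here.
    pg_data = os.path.expanduser("~/.maxkb")
    py_pkgs = os.path.expanduser("~/.python-packages")
    return [
        "docker", "run",
        "-d",                    # run the container detached, in the background
        f"--name={name}",        # name used by later commands such as `docker logs maxkb`
        "--restart=always",      # restart policy: always restart, including after a daemon restart
        "-p", f"{port}:8080",    # publish container port 8080 (the web UI) on the host port
        "-v", f"{pg_data}:/var/lib/postgresql/data",  # keep PostgreSQL data on the host
        "-v", f"{py_pkgs}:/opt/maxkb/app/sandbox/python-packages",  # host dir for sandbox packages
        "1panel/maxkb",          # image name
    ]


if __name__ == "__main__":
    cmd = maxkb_docker_cmd()
    print(shlex.join(cmd))
    # To actually start the container (requires Docker), pass `cmd` to
    # subprocess.run(cmd, check=True).
```

Because the data directories are host mounts, removing and recreating the container with the same `-v` flags reuses the existing data.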

For users in China: if pulling the Docker image fails, follow the offline installation documentation to install MaxKB instead.

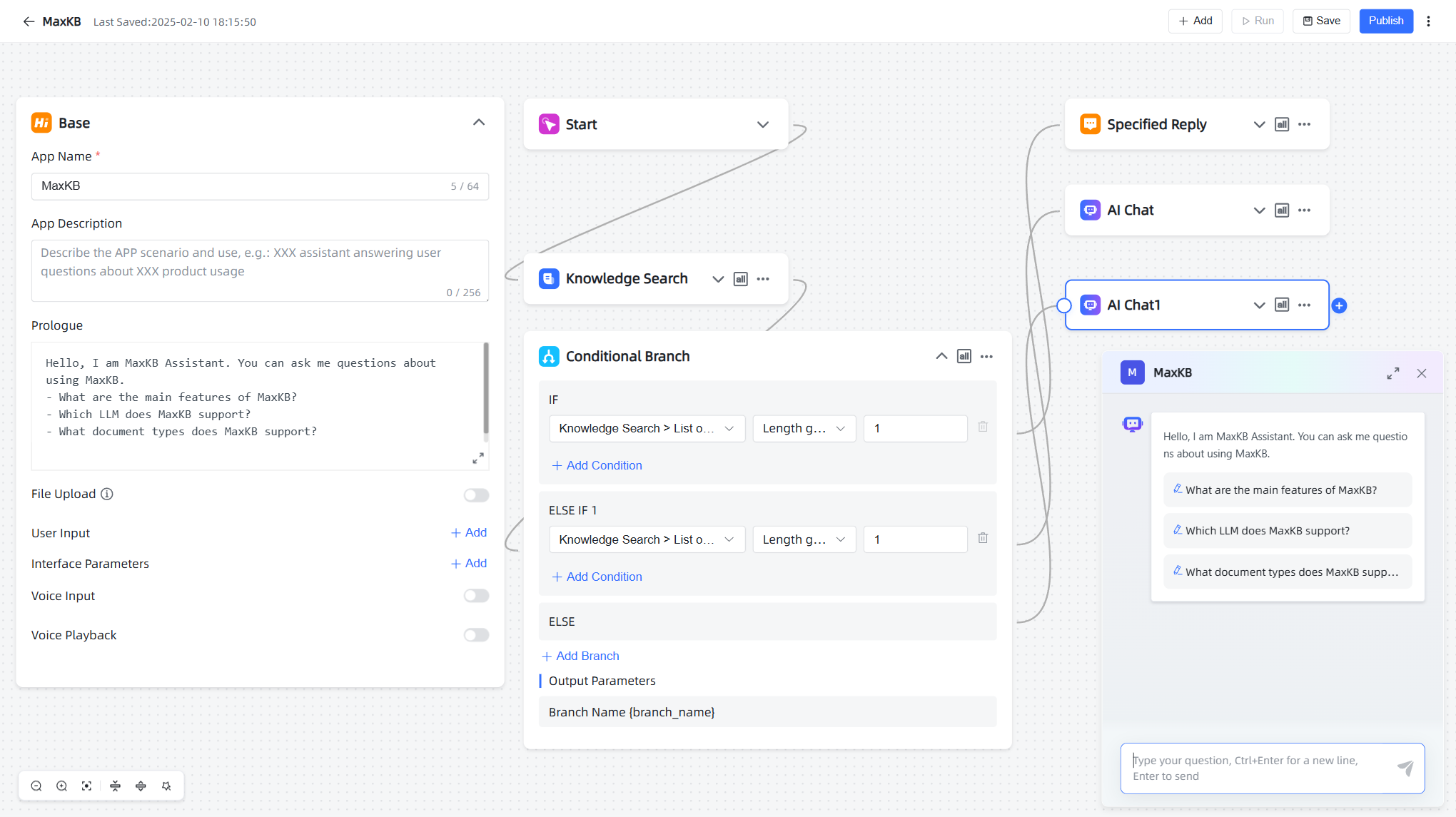


Technical stack

- Frontend: Vue.js

- Backend: Python / Django

- LLM Framework: LangChain

- Database: PostgreSQL + pgvector
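pgvector stores embeddings in a PostgreSQL `vector` column and provides distance operators. Its `<=>` operator is cosine distance (1 minus cosine similarity). The pure-Python sketch below mirrors what a nearest-neighbor query ordered by `<=>` computes, so the ranking logic can be inspected without a database. The helper names, and the table and column names in the SQL comment, are hypothetical. This document does not describe MaxKB's actual schema.

```python
import math


def cosine_distance(a: list[float], b: list[float]) -> float:
    """Cosine distance as computed by pgvector's <=> operator: 1 - cos(a, b)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0:
        raise ValueError("cosine distance is undefined for zero vectors")
    return 1.0 - dot / norm


def top_k(query: list[float], rows: dict[str, list[float]], k: int = 3) -> list[str]:
    """Return the ids of the k stored vectors closest to `query`.

    Roughly the in-memory equivalent of (hypothetical table/column names):
        SELECT id FROM chunks ORDER BY embedding <=> %(query)s LIMIT %(k)s;
    """
    return sorted(rows, key=lambda rid: cosine_distance(query, rows[rid]))[:k]


if __name__ == "__main__":
    rows = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
    print(top_k([1.0, 0.1], rows, k=2))  # ['a', 'c']
```

In production, the database performs this ranking (optionally with an approximate index) instead of scanning every row in Python.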

Feature Comparison

MaxKB is positioned as a ready-to-use RAG (Retrieval-Augmented Generation) intelligent Q&A application, rather than a middleware platform for building large model applications. The following table compares the tools from a functional perspective only.

| Feature | LangChain | Dify.AI | Flowise | MaxKB (Built upon LangChain) |
|---|---|---|---|---|
| Supported LLMs | Rich Variety | Rich Variety | Rich Variety | Rich Variety |
| RAG Engine | ✅ | ✅ | ✅ | ✅ |
| Agent | ✅ | ✅ | ❌ | ✅ |
| Workflow | ❌ | ✅ | ✅ | ✅ |
| Observability | ✅ | ✅ | ❌ | ✅ |
| SSO/Access control | ❌ | ✅ | ❌ | ✅ (Pro) |
| On-premise Deployment | ✅ | ✅ | ✅ | ✅ |


License

Licensed under The GNU General Public License version 3 (GPLv3) (the "License"); you may not use this file except in compliance with the License. You may obtain a copy of the License at

https://www.gnu.org/licenses/gpl-3.0.html

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License.